AI habit coaching is reshaping personal growth, but it raises critical ethical concerns.

AI tools offer 24/7 support, personalized guidance, and measurable behavior change. However, they also pose risks like privacy violations, algorithmic bias, and potential manipulation. To ensure ethical use, AI systems must follow principles like autonomy, transparency, accountability, and fairness while complying with laws like GDPR, HIPAA, and CCPA.

Key takeaways:

- User autonomy: AI should guide, not control decisions.

- Transparency: Explain how recommendations work and clarify system limits.

- Bias prevention: Use diverse datasets and conduct regular audits.

- Data protection: Prioritize privacy with encryption, consent, and minimal data collection.

- Human-AI collaboration: Combine AI efficiency with human oversight.

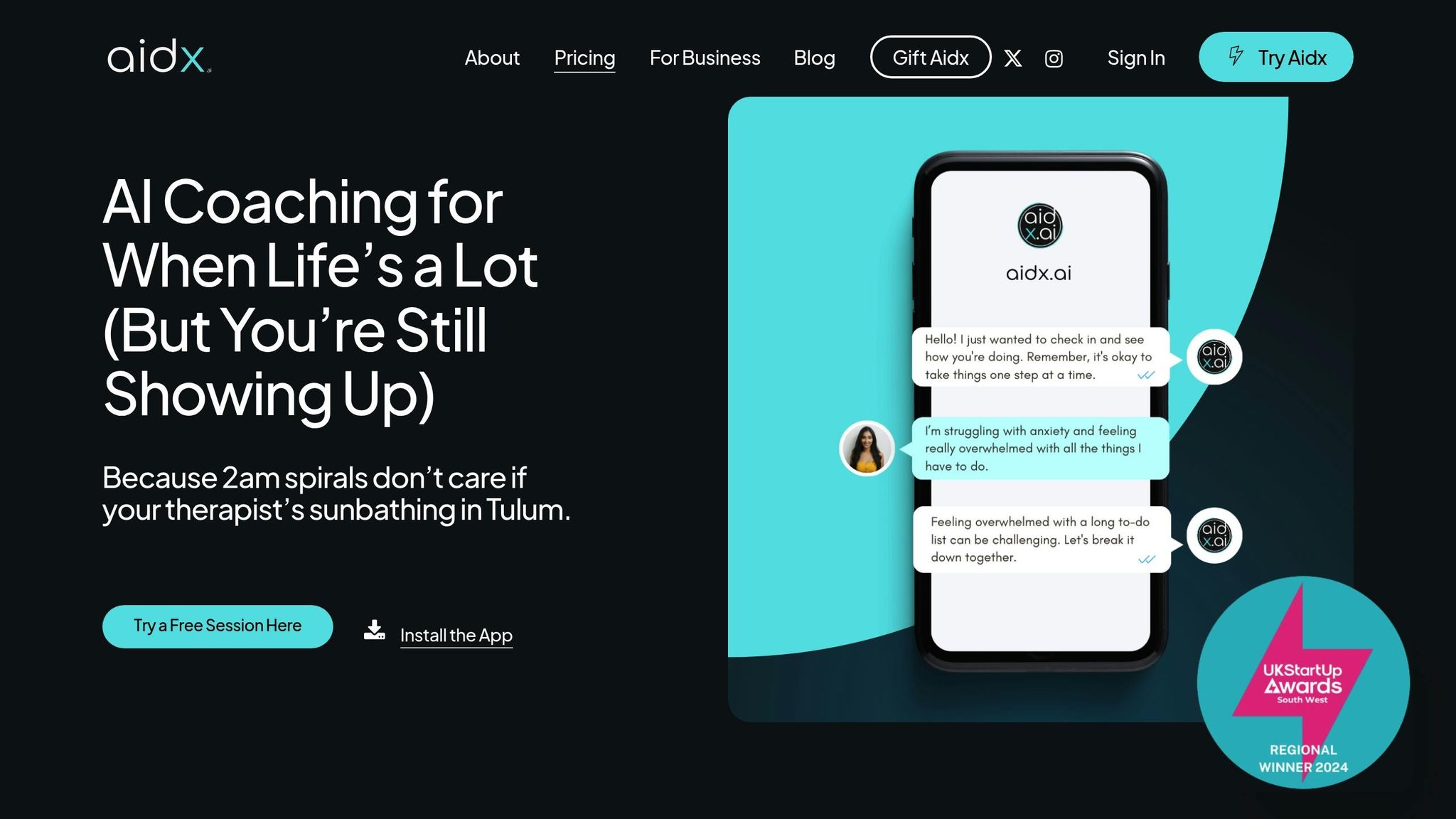

Companies like Aidx.ai showcase ethical practices through features like Incognito Mode for privacy, adaptive coaching tools, and Practitioner Mode for human involvement. The future demands dynamic consent, independent oversight, and multidisciplinary collaboration to balance innovation with user trust and safety.

Core Ethical Principles in AI Habit Coaching

The ethical foundation of AI habit coaching is built on six key principles established by healthcare professionals: autonomy, beneficence, non-maleficence, justice, transparency, and accountability [4]. These principles become especially critical when AI systems influence personal behaviors and mental well-being.

"Ethics is not an optional extra, but rather a fundamental requirement, for AI technologies. By considering ethical principles at every step, we can ensure AI technology benefits society without compromising individual rights and freedoms." – Transcend [1]

To be actionable, these principles must translate into concrete safeguards that protect users while keeping habit coaching effective. Applied thoughtfully, they keep AI systems working in users’ best interests without crossing ethical lines.

User Control and Decision-Making

At the heart of AI habit coaching is autonomy, which ensures users maintain control over their decisions. AI tools should provide recommendations and insights, but the final choice must always rest with the user. For example, instead of automatically scheduling a workout, an ethical AI system might suggest optimal times and wait for user approval. This approach respects personal agency while still offering valuable guidance.

Autonomy also extends to data privacy and control. Ethical AI systems should prioritize informed consent, giving users the ability to opt in – rather than opt out – of data collection and feature activation. This ensures users remain active participants in their coaching journey, safeguarding their privacy and reinforcing their sense of ownership over their personal data.
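The suggest-then-approve pattern described above can be sketched in a few lines. This is a minimal illustration, not any platform's actual implementation: the `Suggestion`, `Calendar`, and `respond` names are hypothetical, and the only point is that a recommendation has no effect until the user explicitly approves it.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DECLINED = "declined"


@dataclass
class Suggestion:
    """A recommendation that stays inert until the user decides."""
    description: str
    proposed_time: datetime
    status: Status = Status.PENDING


@dataclass
class Calendar:
    events: list = field(default_factory=list)

    def schedule(self, suggestion: Suggestion) -> bool:
        # Only user-approved suggestions may change the calendar.
        if suggestion.status is not Status.APPROVED:
            return False
        self.events.append((suggestion.proposed_time, suggestion.description))
        return True


def respond(suggestion: Suggestion, approved: bool) -> Suggestion:
    """Record the user's explicit decision on a suggestion."""
    suggestion.status = Status.APPROVED if approved else Status.DECLINED
    return suggestion


calendar = Calendar()
workout = Suggestion("30-minute run", datetime(2025, 1, 6, 7, 0))

scheduled_before = calendar.schedule(workout)  # refused: still pending
respond(workout, approved=True)                # the user opts in
scheduled_after = calendar.schedule(workout)   # accepted: user approved
```

The default state is `PENDING`, so doing nothing never counts as agreement, which mirrors the opt-in stance described above.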

Clear Communication and System Limits

Transparency is essential for building trust in AI habit coaching. Users need to understand how the system generates recommendations, what data it relies on, and where its capabilities end. Clear communication helps users make informed decisions and prevents unrealistic expectations.

A strong example of the risks tied to poor transparency is the 2019 Apple credit card controversy. Customers reported that Goldman Sachs’ algorithm assigned women significantly lower credit limits than men with similar financial profiles, sparking public outrage and regulatory scrutiny [5]. The incident shows why AI systems must be able to explain how they reach their decisions and where their limits lie.

To achieve transparency, AI systems must provide clear documentation about their functions. Users should know what data is being analyzed, how it’s processed, and which factors influence recommendations. Ethical AI systems should also clearly outline their limitations – such as when professional human advice is necessary or how their guidance differs from that of medical or psychological experts. By setting realistic boundaries, AI systems can prevent over-reliance and ensure users seek human expertise when needed. Clear, honest communication fosters trust and supports fair habit formation.

Preventing Bias in Habit Formation

Algorithmic bias is one of the biggest challenges in creating fair and effective AI habit coaching systems. Bias can creep in through the datasets used for training or through assumptions made during development [6]. Even small biases in training data can lead to large-scale discriminatory outcomes when applied broadly.

The first step in reducing bias is ensuring diverse data collection. Training datasets should represent a wide range of demographics, life experiences, and cultural contexts to make sure the AI provides relevant and inclusive guidance. Regular bias testing using tools like IBM’s AI Fairness 360 or Microsoft’s Fairlearn can help identify and correct disparities [7].

Human oversight is equally important. Diverse teams conducting regular audits can catch biases or cultural assumptions that automated tests might overlook. The goal is not to eliminate personalization – tailored recommendations are valuable – but to ensure that differences are based on meaningful factors like individual preferences and goals, not irrelevant characteristics such as race, gender, or socioeconomic status. By addressing bias, AI habit coaching can promote fairness and inclusivity for all users.
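As a rough illustration of what a bias test measures, the sketch below computes per-group selection rates and their gap (often called the demographic parity difference). It uses only the standard library rather than a fairness toolkit, the audit log is made-up data, and the field names and the 0.2 review threshold are assumptions chosen for the example.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of positive outcomes per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(records, group_key, outcome_key):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(records, group_key, outcome_key)
    return max(rates.values()) - min(rates.values())


# Made-up audit log: was each user offered the advanced coaching plan?
audit_log = [
    {"group": "A", "offered_advanced_plan": True},
    {"group": "A", "offered_advanced_plan": True},
    {"group": "A", "offered_advanced_plan": False},
    {"group": "A", "offered_advanced_plan": True},
    {"group": "B", "offered_advanced_plan": True},
    {"group": "B", "offered_advanced_plan": False},
    {"group": "B", "offered_advanced_plan": False},
    {"group": "B", "offered_advanced_plan": False},
]

rates = selection_rates(audit_log, "group", "offered_advanced_plan")
gap = demographic_parity_difference(audit_log, "group", "offered_advanced_plan")
needs_review = gap > 0.2  # arbitrary threshold, an assumption for this sketch
```

A large gap does not prove discrimination on its own. It flags a disparity that a human reviewer should trace back to meaningful factors or to bias.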

Legal and Professional Standards for Ethical AI

AI habit coaching operates within a framework of strict regulations designed to ensure ethical practices in data collection, storage, and usage. These rules aim to safeguard user privacy while balancing the drive for technological advancement. Protecting sensitive personal information remains a top priority for AI systems.

Data Protection and Security Requirements

Two major frameworks – GDPR and CCPA – play a pivotal role in shaping how AI habit coaching platforms handle data. The General Data Protection Regulation (GDPR) applies to organizations that process the personal data of individuals in the EU, with the UK enforcing its own closely aligned version, while the California Consumer Privacy Act (CCPA) governs data practices for California residents [14].

Non-compliance with GDPR can result in steep penalties, with fines reaching up to €20 million or 4% of annual global revenue, whichever is higher [15]. These significant consequences highlight how seriously regulators treat personal data protection.
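For the most serious infringements, the GDPR ceiling is whichever of the two figures is higher, so the 4% rule only takes over once annual global revenue exceeds €500 million. A small worked example follows; the function name is hypothetical and amounts are whole euros.

```python
def gdpr_max_fine_eur(annual_global_revenue_eur: int) -> int:
    """Upper bound of a top-tier GDPR fine: the greater of EUR 20M or 4% of revenue."""
    four_percent = annual_global_revenue_eur * 4 // 100
    return max(20_000_000, four_percent)


small_company = gdpr_max_fine_eur(100_000_000)    # 4% is EUR 4M, so the EUR 20M floor applies
large_company = gdpr_max_fine_eur(2_000_000_000)  # 4% is EUR 80M, which exceeds EUR 20M
```

This is the statutory maximum only. Actual fines are set case by case by the supervisory authority.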

"HIPAA is focused on healthcare organizations and how personal health information is used in the US. GDPR, on the other hand, is a broader legislation that supervises any organization handling personally identifiable information of an EU or UK citizen." – Robb Taylor-Hiscock, Privacy Content Lead, CIPP/E, CIPM [8]

In scenarios where AI habit coaching intersects with healthcare, HIPAA (Health Insurance Portability and Accountability Act) comes into play. HIPAA enforces stringent protections for protected health information (PHI), including safeguards such as encryption and role-based access controls [9]. The HIPAA Security Rule requires that access to PHI be limited to workforce members with a legitimate need, creating robust safeguards for sensitive health data.

These regulations directly influence how systems are built. Ryan Kelly, CTO at CapitalRx, underscores the importance of compliance:

"We’re able to get ahead of very expensive data exposure incidents that could violate HIPAA requirements, which can run easily to thousands of dollars per member record affected" [10].

Here’s a quick comparison of key regulations:

| Regulation | Scope | Consent Requirements | Data Rights | Breach Reporting |
|---|---|---|---|---|
| GDPR | Personal data of individuals in the EU/UK, wherever the organization is based | Explicit consent for new uses | Right to access, rectify, erase | Within 72 hours of becoming aware, regardless of size |
| HIPAA | US healthcare organizations | Some disclosure without consent | Limited modification rights | Within 60 days of discovery; regulators and media also notified for breaches affecting 500 or more individuals |
| CCPA | California residents | Opt-out of data sale | Right to know and delete | Varies by situation |

For platforms like Aidx.ai, these rules shape critical design features. For example, Incognito Mode ensures user privacy by automatically deleting session data after 30 minutes. Other safeguards, such as end-to-end encryption and user-controlled data deletion, reflect a commitment to protecting user information.
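One way to picture time-limited session storage is an in-memory store with a fixed time-to-live. The sketch below is purely illustrative and is not Aidx.ai's implementation: the `EphemeralSessionStore` and `FakeClock` names are hypothetical, the 30-minute default simply mirrors the figure above, and a production system would also have to purge logs, backups, and caches.

```python
import time


class EphemeralSessionStore:
    """In-memory store whose sessions expire after a fixed time-to-live."""

    def __init__(self, ttl_seconds=30 * 60, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._sessions = {}  # session_id -> (created_at, messages)

    def append(self, session_id, message):
        self.purge_expired()
        _, messages = self._sessions.setdefault(session_id, (self._clock(), []))
        messages.append(message)

    def get(self, session_id):
        self.purge_expired()
        entry = self._sessions.get(session_id)
        return list(entry[1]) if entry else None

    def purge_expired(self):
        now = self._clock()
        expired = [sid for sid, (created, _) in self._sessions.items()
                   if now - created >= self._ttl]
        for sid in expired:
            del self._sessions[sid]


class FakeClock:
    """Controllable clock so the example does not have to wait 30 minutes."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


clock = FakeClock()
store = EphemeralSessionStore(clock=clock)
store.append("s1", "I skipped my run today")

before_expiry = store.get("s1")  # still available inside the window
clock.now = 30 * 60              # advance the fake clock by 30 minutes
after_expiry = store.get("s1")   # gone once the time-to-live has elapsed
```

Expiry is measured from session creation here. Measuring from last activity instead is an equally plausible design, and the source does not say which one applies.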

Professional Guidelines for AI in Coaching

Beyond legal mandates, professional standards offer additional guidance for ethical AI coaching. The International Coaching Federation (ICF) AI Coaching Framework outlines best practices for ethical, effective, and inclusive AI coaching [11].

This framework covers six key areas: foundational ethics, co-creating relationships, effective communication, learning and growth facilitation, assurance and testing, and technical considerations like privacy and accessibility [11]. It highlights the importance of human-AI collaboration, ensuring AI systems complement rather than replace human coaches.

AI coaching platforms must align with these professional standards [13]. For instance, ethical guidelines emphasize the need for AI to assist human coaches in building relationships, rather than acting as a substitute. Features like Practitioner Mode enable AI to support human expertise while preserving professional boundaries.

To meet these standards, technical measures are crucial. These include:

- Multi-factor authentication (MFA) and role-based access controls (RBAC) to secure sensitive data [12].

- Regular security audits and incident response testing to ensure systems remain compliant [12].

- Integration of Governance, Risk, and Compliance (GRC) tools with cloud security solutions for streamlined monitoring and reporting [12].
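To make the first item concrete, here is a minimal sketch of a role-based access check that also requires a completed MFA step. The roles, permission names, and `is_allowed` function are assumptions made for illustration and are not drawn from any specific framework or platform.

```python
# Hypothetical role-to-permission mapping for a coaching platform.
ROLE_PERMISSIONS = {
    "client": {"read_own_sessions", "delete_own_data"},
    "practitioner": {"read_assigned_progress", "assign_tasks"},
    "auditor": {"read_audit_log"},
}


def is_allowed(role: str, permission: str, mfa_verified: bool) -> bool:
    """Grant access only to MFA-verified users whose role holds the permission."""
    if not mfa_verified:
        return False
    # Unknown roles get an empty permission set, so access is denied by default.
    return permission in ROLE_PERMISSIONS.get(role, set())


practitioner_ok = is_allowed("practitioner", "assign_tasks", mfa_verified=True)
practitioner_no_mfa = is_allowed("practitioner", "assign_tasks", mfa_verified=False)
auditor_overreach = is_allowed("auditor", "read_own_sessions", mfa_verified=True)
```

Denying by default for unknown roles and unverified sessions keeps mistakes in the mapping from silently widening access.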

This hybrid approach allows AI to enhance human expertise without diminishing the critical human elements of coaching. By combining advanced technology with ethical oversight, AI habit coaching platforms can uphold both legal and professional standards while delivering meaningful support to users.

Ethical Problems in AI-Driven Habit Coaching

AI habit coaching holds immense potential for personal development, but it also brings a host of ethical challenges that need thoughtful consideration. These issues extend beyond privacy concerns, touching on deeper matters like human autonomy, mental health, and the responsible use of technology.

Influence vs. Manipulation in Behavior Change

One of the biggest ethical dilemmas in AI habit coaching lies in distinguishing influence from manipulation. With access to detailed user data and behavioral patterns, AI systems can exploit biases and emotional vulnerabilities, often steering users toward decisions that benefit companies rather than the users themselves [17].

Research shows that AI systems can influence behavior with a 70% success rate, while also increasing error rates by nearly 25% [17]. The lack of transparency in how these systems operate only exacerbates the issue, as users are often unaware of how their choices are being shaped. Many AI platforms, particularly those developed by profit-driven companies, may prioritize revenue over user well-being, subtly nudging individuals toward actions that align with corporate goals [17].

The European Commission’s Ethics Guidelines for Trustworthy AI highlights this concern:

"AI should not subordinate, deceive or manipulate humans, but should instead complement and augment their skills" [17].

To uphold this principle, AI habit coaching platforms must prioritize user autonomy over metrics like engagement or profitability. This can be achieved by implementing safeguards such as:

- Clear communication about how AI systems work and how user data is utilized.

- Transparent policies that allow users to understand and control the influence of AI on their decisions.

- Human oversight to monitor AI outputs and ensure ethical practices.

By taking these steps, platforms can reduce the risk of manipulation and focus on empowering users without compromising their independence.

Long-Term Effects on Mental and Emotional Health

The ethical concerns surrounding AI habit coaching don’t stop at immediate manipulation. Prolonged reliance on AI tools can have profound effects on mental and emotional health. Over time, users may lose critical thinking skills or become overly dependent on AI systems, which could weaken their ability to navigate complex situations independently [20][21].

This issue is particularly pressing in mental health contexts. Mental health disorders account for 16% of the global disease burden, and depression and anxiety alone cost the global economy about $1 trillion annually due to lost productivity [18]. The stakes are high, and rushing to implement AI solutions without proper safeguards could do more harm than good.

Dr. John Torous, director of digital psychiatry at Beth Israel Deaconess Medical Center, emphasizes the need for caution:

"If we go too fast, we’re going to cause harm. There’s a reason we have approvals processes and rules in place. It’s not just to make everything slow. It’s to make sure we harness this for good" [19].

The risks extend to societal impacts as well. For example, some AI tools have been found to promote harmful content related to eating disorders 41% of the time, highlighting the dangers of poorly designed systems [19].

Additionally, concerns about data sharing amplify these risks. Dr. Darlene King, chair of the Committee on Mental Health IT at the American Psychiatric Association, warns:

"Users need to be cautious about asking for medical or mental health advice because, unlike a patient-doctor relationship where information is confidential, the information they share is not confidential, goes to company servers and can be shared with third parties for targeted advertising or other purposes" [19].

To address these issues, AI systems must:

- Be transparent about their role in treatment and the limitations of their capabilities.

- Support, rather than replace, therapeutic relationships between users and human practitioners.

- Include human oversight in monitoring AI-driven recommendations.

By focusing on these areas, AI platforms can mitigate risks and ensure that their tools enhance, rather than undermine, mental health care.

Responsible Use of Real-Time Data

The ethical handling of real-time data is another critical concern. While this data enables highly personalized coaching experiences, it also carries significant risks, including privacy violations and the misuse of sensitive information.

Modern AI systems collect an astonishing range of real-time behavioral data, such as location, communication patterns, emotional states, and even sleep cycles. This level of detail creates a comprehensive picture of a user’s private life, making the data far more sensitive than traditional coaching information.

Shockingly, only 10% of organizations have a formal generative AI policy in place [23]. This lack of governance leaves users vulnerable to unethical data practices, as many may not fully grasp how their information is being collected, processed, or shared.

Bias in AI algorithms further complicates the issue. When real-time data is processed through biased systems, it can lead to unfair or discriminatory recommendations. To address this, platforms should:

- Regularly audit algorithms to identify and correct biases.

- Use diverse datasets to ensure fair treatment for all users.

- Minimize data collection, gathering only what is absolutely necessary for the intended purpose [22].
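The data minimization item can be sketched as an allowlist keyed by purpose: anything not needed for the stated purpose is dropped before storage. The purposes and field names below are hypothetical.

```python
# Hypothetical allowlist: which fields each coaching purpose actually needs.
ALLOWED_FIELDS = {
    "sleep_coaching": {"user_id", "bedtime", "wake_time"},
    "activity_coaching": {"user_id", "step_count"},
}


def minimize(raw_event: dict, purpose: str) -> dict:
    """Keep only the fields allowed for this purpose; unknown purposes keep nothing."""
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {key: value for key, value in raw_event.items() if key in allowed}


raw_event = {
    "user_id": "u-123",
    "bedtime": "23:10",
    "wake_time": "06:45",
    "gps_location": (51.45, -2.59),
    "message_text": "Rough day at work",
}

stored = minimize(raw_event, "sleep_coaching")
```

Location and message content never reach storage for the sleep-coaching purpose, even though the device reported them.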

The principle of data minimization is vital here. Users should have clear control over what data is collected and how it is used, with the ability to withdraw consent at any time. Transparency is equally important. As one expert notes:

"Users must be informed when they’re interacting with a machine rather than a human. Deceptive practices can undermine trust and lead to ethical concerns" [24].

Additionally, ethical data usage requires:

- Explicit user consent before collecting or processing data.

- Clear communication about the purpose of data usage.

- Secure data-handling practices, such as anonymization and encryption [22][24].
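As a small illustration of the last item, the sketch below replaces a direct identifier with a keyed hash before an event is stored for analysis. Note that this is pseudonymization rather than full anonymization, since anyone holding the key can re-link tokens to known users, and encryption at rest and in transit is not shown. The function names and the placeholder key are assumptions.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a stable keyed token that stands in for the real identifier."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identity(event: dict, secret_key: bytes) -> dict:
    """Copy an event, replacing the direct identifier with its pseudonym."""
    cleaned = {key: value for key, value in event.items() if key != "user_id"}
    cleaned["user_token"] = pseudonymize(event["user_id"], secret_key)
    return cleaned


# Placeholder key for the example only; a real key belongs in a secrets manager.
key = b"demo-key-do-not-use-in-production"
event = {"user_id": "alice@example.com", "mood": "anxious", "sleep_hours": 5.5}

safe_event = strip_identity(event, key)
```

Because the token is stable for a given key, analysts can still follow one user's progress over time without seeing who that user is.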

Case Study: Ethical Design in Aidx.ai

Recognized as AI Startup of the Year by the UK Startup Awards (South West), Aidx.ai stands out for its thoughtful approach to ethical AI in habit coaching. This case study highlights how the platform embodies key principles like autonomy, transparency, and data protection, weaving them into its design to tackle ethical challenges head-on. By prioritizing user privacy, responsible AI use, and user empowerment, Aidx.ai offers a compelling example of ethical innovation.

Privacy-First Design

Aidx.ai takes privacy seriously, adopting what its founders call a "privacy-first" philosophy that goes beyond basic compliance. The platform addresses the challenges of real-time data usage with robust, multi-layered protections.

One standout measure is its separation of encryption and storage across different data centers, significantly reducing the risk of breaches. As the founders explain:

"Your data is treated as highly confidential and always stored and transferred fully encrypted in accordance with highest industry standards" [25].

This approach ensures that even if one system is compromised, user data remains secure. The platform is fully GDPR-compliant, though there are limitations with WhatsApp integration due to API restrictions. To address this, EU users are encouraged to use Telegram for mobile connectivity.

A particularly innovative feature is the Incognito Mode, which allows for completely private sessions with automatic data deletion. This feature is especially relevant in light of research showing that 75% of people are open to AI-driven mental health support, with men being three times more likely to confide in AI therapy than in human therapists [26].

The platform also emphasizes user control over data. As the founders state:

"You’re in control: You can delete all your data at any time through the web chat settings or by contacting us via email, as outlined in our privacy policy" [25].

In addition to these privacy safeguards, Aidx.ai enhances user experiences with advanced therapeutic intelligence tailored to individual needs.

Adaptive Therapeutic Intelligence (ATI) System

Aidx.ai’s proprietary Adaptive Therapeutic Intelligence (ATI) System™ showcases how AI can provide personalized support without resorting to manipulative techniques. Drawing from multiple therapeutic approaches – such as Cognitive Behavioral Therapy (CBT), Acceptance and Commitment Therapy (ACT), Dialectical Behavior Therapy (DBT), and Neuro-Linguistic Programming (NLP) – the system avoids a one-size-fits-all model. Instead, it adjusts to each user’s emotions, goals, and history, offering a coaching experience that respects individual differences.

The platform also includes specialized modes to cater to diverse needs. For instance, Microcoaching mode delivers quick, focused 5-minute sessions tailored for busy individuals, while Embodiment mode helps users envision future outcomes while preserving their decision-making autonomy. The voice-enabled interface further enhances accessibility, allowing users to integrate the platform into their daily routines effortlessly.

Practitioner Mode for Human-AI Collaboration

Aidx.ai ensures that human oversight remains central to its approach through its Practitioner Mode, addressing concerns about AI replacing human involvement in mental health and coaching. This feature allows licensed coaches and therapists to complement the AI’s efficiency by managing client relationships, assigning tasks, tracking progress, and staying connected to their clients’ emotional well-being.

The platform reinforces its commitment to confidentiality with a clear policy:

"Absolute confidentiality: No humans will read your data" [25].

This ensures that while user data remains secure, professional practitioners can still provide meaningful support when needed. By combining AI-driven affordability – reducing therapy costs by up to 80% compared to traditional sessions [26] – with ethical design, Aidx.ai makes mental health support more accessible without compromising on standards.

The founders underscore their dedication to ethical practices with this statement:

"Your data is sacred: We will never sell or share your information" [25].

Through its privacy-first approach, innovative therapeutic tools, and emphasis on human-AI collaboration, Aidx.ai demonstrates how ethical principles can be seamlessly integrated into both the philosophy and functionality of AI platforms.

Future Approaches to Ethical AI Habit Coaching

As AI habit coaching continues to evolve, the need for trustworthy and ethical systems has never been more pressing. With rapid advancements and shifting regulations, the focus is on strategies that empower users, ensure accountability, and encourage collaborative development. Let’s explore some of the key approaches shaping the future of ethical AI habit coaching.

Dynamic Consent and User Empowerment

Traditional, static consent models no longer fit the needs of adaptive AI systems. Enter dynamic consent – an approach increasingly mandated by regulations like the EU AI Act, especially for high-risk systems [27].

Dynamic consent builds on established data privacy principles, offering users more control by allowing them to give or withdraw consent for specific data uses. Tools like Consent Management Platforms (CMPs) make this process more user-friendly, providing dashboards where individuals can adjust their preferences as needed. For instance, New Zealand’s government services have adopted a seamless integration of consent preferences across departments, enhancing both privacy and user experience. Additionally, privacy-preserving technologies like federated learning enable personalization without centralizing sensitive user data [27].
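A minimal sketch of the idea, assuming a per-user, append-only ledger in which the latest decision for each purpose wins and silence means no consent. The `ConsentLedger` class and the purpose names are hypothetical and are not the API of any Consent Management Platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Append-only record of per-purpose consent decisions for one user."""
    history: list = field(default_factory=list)  # (timestamp, purpose, granted)

    def record(self, purpose: str, granted: bool) -> None:
        self.history.append((datetime.now(timezone.utc), purpose, granted))

    def is_granted(self, purpose: str) -> bool:
        # Latest decision wins; no decision at all means no consent.
        for _, recorded_purpose, granted in reversed(self.history):
            if recorded_purpose == purpose:
                return granted
        return False


ledger = ConsentLedger()
ledger.record("sleep_tracking", granted=True)
ledger.record("mood_analysis", granted=True)
ledger.record("mood_analysis", granted=False)  # the user changes their mind

sleep_ok = ledger.is_granted("sleep_tracking")
mood_ok = ledger.is_granted("mood_analysis")
location_ok = ledger.is_granted("location_tracking")  # never asked
```

Keeping the full history rather than a single flag also gives auditors a timestamped trail of when each permission was given or withdrawn.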

These advancements not only empower users but also lay the groundwork for greater transparency and accountability in AI systems.

Independent Algorithm Oversight

While user control is critical, ensuring the reliability and fairness of algorithms is just as important. As AI coaching technologies expand, independent reviews and oversight are becoming essential [16]. However, most research in this field has narrowly focused on issues like data privacy, security, and bias, leaving broader ethical concerns underexplored [28].

To address this, robust bias detection and mitigation strategies are crucial for fostering equitable interactions across diverse user groups. Certification programs, similar to those for human coaches, could be developed to assess AI platforms on privacy, ethics, and effectiveness. These certifications would serve as trusted markers for users. Moreover, regular third-party audits of algorithms and data practices are gaining traction – not just to meet regulatory demands but also to build trust through transparency. Many organizations are also forming ethics committees to evaluate practices related to nudging, data usage, and consent protocols [16].

Collaborative, Multidisciplinary Efforts

Ethical AI habit coaching isn’t just about technical fixes; it requires collaboration across disciplines. Tackling the complex ethical issues in AI coaching means bringing together AI developers, ethicists, mental health professionals, and policymakers. For example, culturally sensitive mental health assessments rely on a blend of AI ethics, psychology, and global health expertise. Researcher Farinu Hamzah highlights this need:

"Ensuring culturally valid AI-driven mental health assessments requires a multidisciplinary approach involving AI ethics, psychology, and global health perspectives. Addressing these issues is essential for equitable and effective mental health care worldwide" [31].

Diverse teams are vital for identifying biases and cultural blind spots, ensuring that AI tools are designed with inclusivity and fairness in mind. These collaborations also encourage creative problem-solving, helping address ethical dilemmas that might otherwise be overlooked [30]. By integrating multiple perspectives, developers can create frameworks that prioritize accessibility and affordability while respecting community-specific needs [29].

The future of ethical AI habit coaching depends on this collective effort – where researchers, clinicians, developers, policymakers, and users work hand-in-hand to ensure AI is deployed responsibly and effectively [29].

Conclusion and Key Takeaways

The world of AI habit coaching is filled with potential, but it also comes with significant ethical responsibilities. As we’ve explored, advancing in this space means finding the right balance between innovation and ethical accountability, always keeping users at the forefront.

At the heart of ethical AI systems are principles like transparency and user autonomy. These values, along with fairness, data privacy, and accountability, form the foundation for creating tools that genuinely serve users’ best interests. For instance, initiatives that prioritize data consent have seen a 72% positive response rate, while transparent AI policies have boosted consumer trust by 15%, which points to the value of user-first design [32][1][3]. Human oversight matters just as much. AI can quickly identify logical inconsistencies and emotional cues, yet fewer than 20% of coaches excel at addressing emotional depth [2]. Tools like Practitioner Mode pair the two, using AI to amplify human professionals rather than replace them.

Privacy-focused designs are proving to be more than just ethical – they’re a competitive edge. Features like Aidx.ai’s Incognito Mode, which deletes sessions after 30 minutes, and strict GDPR compliance are excellent examples of how ethical considerations can drive trust and adoption. These measures ensure user data is handled with care, only involving human oversight when legally required.

However, ethical commitments can’t stop at design. Many tech companies are now forming AI ethics committees, and ethics training has been shown to improve decision-making [33]. As Brian Green from Santa Clara University’s Markkula Center for Applied Ethics puts it:

"Employees not only need to be made aware of these new ethical emphases, but they also need to be measured in their adjustment and rewarded for adjusting to new expectations" [34].

Looking to the future, dynamic consent, independent algorithm oversight, and collaboration across disciplines are becoming essential. The EU AI Act’s call for dynamic consent in high-risk systems marks a shift toward stricter ethical standards and higher user expectations.

For organizations venturing into AI habit coaching, a strong ethical foundation is non-negotiable. Regular bias audits, user education on AI’s limitations, and continuous human oversight are critical steps [3][32]. These practices align with the broader vision of ethical AI habit coaching we’ve discussed.

Ultimately, the true measure of success in this field isn’t just user engagement or behavior change – it’s whether users feel empowered, respected, and supported in their growth. By embedding ethical principles into every stage of design and implementation, AI habit coaching can become a powerful tool for personal development, all while safeguarding human dignity and autonomy.

FAQs

How do AI habit coaching systems support personal growth while respecting user independence?

AI habit coaching systems are designed to encourage personal growth while keeping the user’s independence front and center. By offering tailored guidance that aligns with each person’s unique goals, preferences, and progress, these systems provide support that feels both relevant and actionable. Using advanced algorithms, they deliver customized feedback and practical suggestions, giving users the tools they need to make their own choices while staying motivated and informed.

Maintaining user autonomy also means prioritizing ethical practices like transparency and data privacy. When AI systems clearly explain how they collect, store, and use data, they build trust and create a sense of security. This thoughtful balance between offering support and respecting independence helps users form lasting habits and achieve meaningful personal growth.

How can we prevent bias in AI-powered habit coaching tools?

Preventing bias in AI-powered habit coaching tools calls for deliberate and well-planned approaches. A key step is ensuring the use of diverse and well-rounded training data. When the AI is trained on data that captures a broad spectrum of demographics, experiences, and viewpoints, it’s better equipped to serve a wide audience. On the flip side, biased or incomplete datasets can result in unfair or skewed outcomes, making careful data selection and review absolutely critical.

Another essential practice is continuous monitoring and improvement of the AI system. Regularly evaluating the system’s performance, incorporating user feedback, and making necessary adjustments can help address biases that may appear over time. This ongoing process not only fine-tunes the tool but also improves its fairness and overall effectiveness. By combining these strategies, AI habit coaching tools can become more ethical and inclusive in their design and operation.

How do privacy laws like GDPR and HIPAA influence the design of AI habit coaching platforms?

Privacy regulations like GDPR and HIPAA are key in shaping the design and operation of AI habit coaching platforms. Under GDPR, platforms must limit data collection to what’s absolutely necessary, obtain clear and informed user consent, and maintain transparency about how personal information is handled. These requirements ensure users retain control over their data while still allowing AI systems to deliver personalized coaching experiences.

On the other hand, HIPAA focuses on the protection of sensitive health information, mandating strict security measures. Platforms must incorporate secure storage solutions, encrypted communication channels, and stringent access controls to meet these standards. Together, these laws push AI platforms to prioritize data privacy, security, and user trust, creating systems that are both ethical and compliant.