Cognitive distortions are mental habits that skew how we perceive reality, often leading to negative emotions and mental health challenges like anxiety and depression. AI is now being used to identify these patterns in real time, offering new tools for mental health support.

Key points:

- AI can detect distortions like catastrophizing, overgeneralization, and "should" statements using Natural Language Processing (NLP).

- Machine learning models analyze text, speech, and behavior to find patterns that indicate distorted thinking.

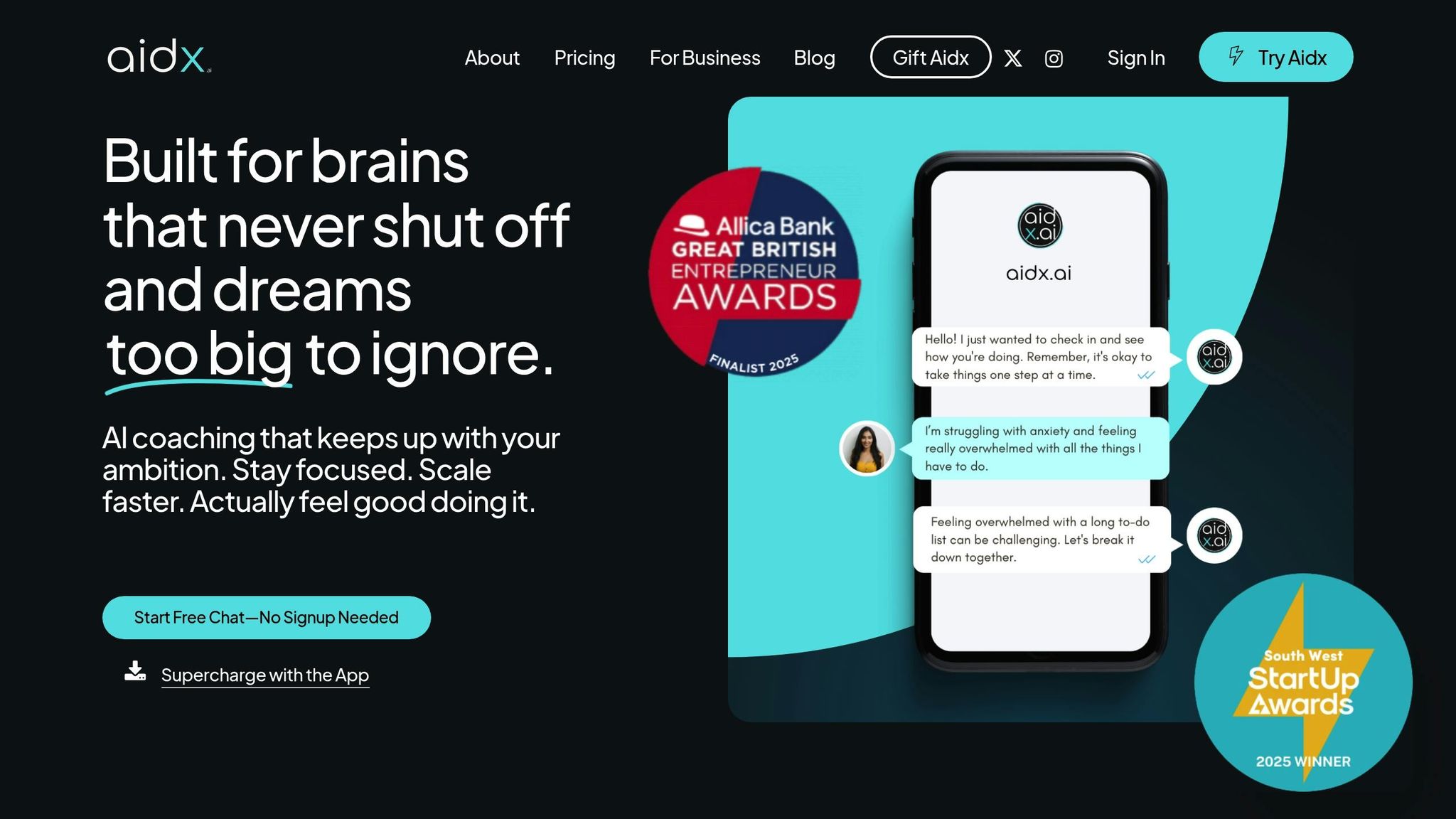

- Tools like Aidx.ai provide personalized mental health support by identifying these distortions and offering real-time feedback.

- Challenges remain, including data limitations, bias, and ethical concerns, but advancements like multimodal integration and explainable AI are addressing these issues.

AI is not replacing therapists but enhancing their ability to help clients by identifying thought patterns that may otherwise go unnoticed. This technology is also making mental health support more accessible through apps and platforms. While hurdles like data diversity and transparency need attention, AI’s role in mental health care is growing steadily.

Types of Cognitive Distortions AI Can Identify

Main Cognitive Distortions

AI systems have proven effective at pinpointing specific cognitive distortions in human communication, especially in text-based exchanges between clients and mental health professionals. A study conducted by the University of Washington highlights five primary distortions that AI can reliably detect [2].

One of the most recognizable patterns is mental filtering, where a person focuses solely on negative details and disregards anything positive. For example, if someone says, "The entire day was ruined because I spilled coffee on my shirt", AI can identify this tendency to zero in on the negative while ignoring other aspects of the day.

Jumping to conclusions is another distortion AI can detect, encompassing both mind reading and fortune telling. Mind reading involves assuming what others are thinking without evidence, while fortune telling predicts negative outcomes without justification. Statements like "I know she thinks I’m incompetent" or "This presentation will definitely be a disaster" are clear examples that AI can pick up on.

Catastrophizing – blowing problems out of proportion and imagining worst-case scenarios – is another distortion AI identifies. A phrase like "If I fail this test, my entire future is over" signals this type of exaggerated thinking [3].

AI also recognizes "should" statements, which often reflect unrealistic expectations or self-imposed pressure. Sentences such as "I should always be perfect" or "People should never disagree with me" indicate rigid thinking patterns that contribute to stress and emotional discomfort.

Lastly, overgeneralizing involves drawing sweeping conclusions from a single event. For instance, assuming "I failed once, so I’ll always fail" is a clear marker of this distortion. AI systems detect these patterns through specific linguistic cues [3] [5].

Beyond these core distortions, AI technology is expanding to identify others, including all-or-nothing thinking, emotional reasoning, labeling and mislabeling, personalization, and discounting the positive [5]. Each of these has distinct language markers that machine learning algorithms are trained to recognize.
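To make the idea of "language markers" concrete, here is a minimal rule-based sketch. The cue patterns and the `flag_distortions` helper are hypothetical, built only from the example sentences in this article. The systems described here learn such markers from annotated data, so treat this as an illustration of the concept rather than how any cited model works.

```python
import re

# Hypothetical cue patterns derived from this article's example sentences.
# Trained models learn far richer, context-aware markers than these.
CUES = {
    "should statement": re.compile(r"\b(should|must|ought to)\b", re.IGNORECASE),
    "overgeneralizing": re.compile(r"\b(always|never|everyone|nobody)\b", re.IGNORECASE),
    "catastrophizing": re.compile(r"\b(my entire future|is over)\b", re.IGNORECASE),
    "mind reading": re.compile(r"\bI know (he|she|they) thinks?\b", re.IGNORECASE),
    "fortune telling": re.compile(r"\bwill definitely\b", re.IGNORECASE),
}


def flag_distortions(text: str) -> list[str]:
    """Return the labels whose cue pattern appears somewhere in the text."""
    return [label for label, pattern in CUES.items() if pattern.search(text)]


print(flag_distortions("I should always be perfect"))
# ['should statement', 'overgeneralizing']
```

Keyword rules like these over-trigger easily ("I never miss my morning run" would be flagged as overgeneralizing), which is one reason context-aware models are preferred in practice.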

A University of Washington analysis of 7,354 text messages found an average of 48.5 distortions per client, underscoring how common these patterns are in everyday communication [2]. The study also found that individuals with psychiatric conditions tend to exhibit more frequent and severe distortions [3].

Understanding these distortions lays the foundation for why AI focuses on detecting them.

Why AI Focuses on These Patterns

AI systems prioritize these specific cognitive distortions because they are measurable and identifiable through distinct language patterns. Unlike abstract psychological concepts, distortions like mental filtering or catastrophizing appear in clear, recognizable ways that machine learning models can analyze [3] [5].

Decades of Cognitive Behavioral Therapy (CBT) research have shown that these distortions play a central role in conditions like depression and anxiety. Since correcting distorted thinking is a key goal of CBT, AI tools that can identify these patterns offer meaningful support for mental health professionals [1].

"This study demonstrated that NLP models can identify cognitive distortions in text messages between people with serious mental illness and clinicians at a level comparable to that of clinically trained raters." – Researchers from the University of Washington School of Medicine [3]

Studies back up the effectiveness of AI in this area. For instance, models based on BERT (Bidirectional Encoder Representations from Transformers) have achieved performance comparable to clinical experts, with F1 scores of roughly 0.62 to 0.63 [2].
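For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision (how many flagged messages were truly distorted) and recall (how many distorted messages were caught). The sketch below computes it from raw counts. The counts in the usage line are invented for illustration and do not come from the cited study.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Harmonic mean of precision and recall, computed from raw counts."""
    if true_pos == 0:
        return 0.0  # no correct detections: precision and recall are both zero
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Illustrative counts only: 62 correct flags, 38 false alarms, 38 misses.
print(round(f1_score(62, 38, 38), 2))  # 0.62
```

Because F1 punishes both false alarms and misses, it suits distortion detection, where distorted messages are a minority and plain accuracy can look deceptively high.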

These distortions are also valuable for AI to monitor because they act as early warning signs of declining mental health. By identifying distorted thinking in real time, AI can provide timely feedback and intervention opportunities. This capability isn’t limited to clinical settings – it extends to wellness platforms like Aidx.ai, which uses its ATI System™ to flag cognitive distortions in everyday conversations.

When distortions are addressed, individuals often experience reduced negative emotions and an improved mental state [1]. This creates a clear link between AI’s detection capabilities and positive therapeutic outcomes.

AI’s strength lies in its ability to process massive amounts of text and spot subtle linguistic cues that indicate distorted thinking. As these systems analyze more data, they continually improve, becoming even better at distinguishing between healthy and distorted thought patterns [2].

How AI and Machine Learning Detect Cognitive Distortions

Natural Language Processing for Text Analysis

Natural Language Processing (NLP) is the backbone of AI’s ability to pinpoint cognitive distortions in human communication. By examining word choices and context, NLP can detect distorted language patterns in real time, flagging instances of catastrophizing, mental filtering, or other cognitive distortions.

These algorithms break down text or speech into smaller elements, searching for keywords, sentiment indicators, and contextual clues. For example, a fine-tuned BERT (Bidirectional Encoder Representations from Transformers) model has been shown to perform on par with trained clinicians in identifying distortions in text-message exchanges between clients and therapists [2].

"NLP methods can be used to effectively detect and classify cognitive distortions in text exchanges, and they have the potential to inform scalable automated tools for clinical support during message-based care for people with serious mental illness." – Justin S. Tauscher, Ph.D. et al. [2]

One of the standout advantages of NLP is its ability to process vast amounts of text with consistent accuracy. Unlike human reviewers, who might experience fatigue or subjective biases, NLP systems can analyze thousands of messages without losing efficiency or precision.

These capabilities are now integrated into advanced platforms that analyze conversations in real time, providing immediate feedback. Such advancements in NLP lay the groundwork for even more sophisticated machine learning models.

Machine Learning Models and Training

Machine learning models are at the heart of AI’s capacity to identify cognitive distortions. Using supervised learning, these systems are trained on thousands of annotated texts, with each sample labeled to indicate whether it contains distortions and, if so, the specific type. This helps the AI learn the subtle language patterns tied to different distortions.

Various machine learning algorithms, including logistic regression, support vector machines (SVM), and neural networks, have been used to detect cognitive distortions effectively [9] [11]. However, BERT has outperformed these traditional methods, excelling in understanding the context and relationships between words [2].
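The supervised-learning loop described above (label examples, count patterns, predict on new text) can be sketched in a few lines. This example uses a multinomial naive Bayes classifier, chosen only because it fits in standard-library Python. It is not one of the algorithms cited above, and the six training sentences are made up for illustration.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


def train(samples):
    """Count word occurrences per label from (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in samples:
        label_counts[label] += 1
        for token in tokenize(text):
            word_counts[label][token] += 1
            vocab.add(token)
    return word_counts, label_counts, vocab


def predict(model, text):
    """Return the label with the highest smoothed log-probability."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for token in tokenize(text):
            if token in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][token] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up annotated examples; real training sets contain thousands.
SAMPLES = [
    ("i always fail at everything", "overgeneralizing"),
    ("nobody ever listens to me", "overgeneralizing"),
    ("i should be perfect", "should statement"),
    ("people should agree with me", "should statement"),
    ("i went for a walk today", "none"),
    ("we had lunch with friends", "none"),
]

model = train(SAMPLES)
print(predict(model, "you should relax"))  # should statement
```

A bag-of-words model like this ignores word order entirely, which is the gap that context-aware models such as BERT are meant to close.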

Training datasets often come from crowdsourced mental health texts and online therapy platforms, with some models achieving F1 scores as high as 0.88 [9]. To address class imbalance – where certain distortions are more common than others – researchers employ techniques like oversampling and data augmentation, ensuring less frequent distortions are also recognized [10].
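Random oversampling, the simplest of the balancing techniques mentioned above, can be sketched as follows. The `oversample` helper and the toy dataset are hypothetical. Published work may use more elaborate augmentation, so read this as one plain way to even out class counts.

```python
import random
from collections import Counter, defaultdict


def oversample(samples, seed=0):
    """Duplicate minority-class samples at random until all classes are equal in size."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    by_label = defaultdict(list)
    for text, label in samples:
        by_label[label].append((text, label))
    target = max(len(items) for items in by_label.values())
    balanced = []
    for items in by_label.values():
        balanced.extend(items)
        # Draw extra copies with replacement to reach the target size.
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced


# Toy data: three "should statement" examples but only one "catastrophizing".
data = [
    ("i should be perfect", "should statement"),
    ("i must never fail", "should statement"),
    ("they should agree", "should statement"),
    ("my future is over", "catastrophizing"),
]
balanced = oversample(data)
print(Counter(label for _, label in balanced))
```

Oversampling should be applied to the training split only. Duplicating examples before splitting leaks copies into the test set and inflates scores.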

Machine learning models can also be retrained or fine-tuned as new labeled data becomes available, which can improve their accuracy over time. This ongoing refinement helps tackle the complexities of detecting cognitive distortions.

Current Challenges in AI Detection

Despite the progress, AI systems still face hurdles in detecting cognitive distortions, underscoring the need for further advancements before they can reliably complement human judgment in mental health care.

One significant challenge is the lack of structured datasets. Cognitive distortion detection relies on limited training sets that may not reflect the full range of human communication patterns [4]. Short texts, in particular, can lack the necessary context for accurate detection [4].

To address this, researchers like Alhaj and colleagues have worked on enhancing short textual representations using transformer-based topic modeling tools like BERTopic and AraBERT, improving classification accuracy even with limited text [4].

Another issue is imbalanced data, where certain distortion types are underrepresented in training datasets. Techniques like data augmentation and domain-specific models, such as MentalBERT, have shown promise in addressing this imbalance [4].

Large Language Models (LLMs) also face unique difficulties. Studies show they often over-diagnose distortions, flagging benign statements as problematic, especially in culturally specific contexts like Chinese social media [4]. To tackle this, frameworks like the Diagnosis of Thought (DoT) and later the ERD framework (extraction, reasoning, and debate) were developed. The ERD framework, in particular, uses multiple LLM agents to classify distortions more accurately, significantly reducing false positives [4].

Ethical concerns further complicate AI detection. Issues such as technological bias, privacy risks, and false positives could lead to unintended consequences, including exacerbating health inequities [12].

Despite these obstacles, the field is making strides. Recent innovations include multimodal datasets that combine text, audio, and visual data. Singh and colleagues proposed a multitask framework integrating these diverse data types, achieving better performance than existing models [4]. Addressing these challenges is essential for advancing AI’s role in real-time mental health support.

Practical Applications and Benefits of AI in Cognitive Health

Mobile Apps and AI Chatbots

AI-powered mental health apps are making strides in helping users recognize and address cognitive distortions in their everyday lives. These platforms combine real-time feedback, advanced pattern recognition, and tailored interventions to deliver support exactly when it’s needed.

For example, AI journaling apps analyze user entries in real time, identifying language patterns that signal distorted thinking. They then provide personalized prompts to steer users toward healthier thought processes. Using natural language processing (NLP), these systems can detect emotional intensity and behavioral trends, offering insights into negative thought patterns as they emerge.

Many mobile AI applications have proven effective in detecting cognitive distortions across diverse groups of users. What sets these tools apart is their ability to intervene immediately when negative spirals begin. By offering instant feedback and guidance, they help users address issues before they escalate into more serious emotional challenges. This kind of real-time support has been shown to significantly improve overall well-being.

User Benefits and Results

Research highlights the tangible mental health benefits of AI-driven detection systems. These tools have demonstrated positive outcomes in several critical areas of psychological well-being.

One area where users see noticeable improvement is emotional regulation. Studies show that 67% of users improved their ability to manage emotions within just eight weeks of using AI journaling platforms [13]. This progress is largely due to the AI’s ability to pinpoint specific emotional triggers and help users unpack the thought patterns contributing to instability.

Depression symptoms also respond well to AI interventions. A 2024 study conducted by UCSF found a 22% reduction in depression symptoms over two months among participants using AI journaling tools [13]. Similarly, AI-assisted cognitive behavioral therapy (CBT) apps report a 31% decrease in anxiety symptoms compared to traditional journaling methods [13]. Beyond reducing symptoms, users gain self-awareness and develop consistent journaling habits, which help them better understand their emotional triggers and make more informed decisions about self-care.

Gamification elements in many AI mental health apps further enhance user engagement. Research shows that incorporating game-like features can boost user retention and activity by up to 50% [14]. This sustained engagement ensures long-term benefits from cognitive distortion detection tools. Additionally, CBT-based interventions delivered through apps have been shown to reduce depression and anxiety symptoms by up to 50% for many users [14].

How Aidx.ai Works

Aidx.ai takes these advancements a step further with its Adaptive Therapeutic Intelligence (ATI) System™, which offers a personalized approach to managing cognitive distortions. This proprietary system provides real-time mental health support tailored to each user’s emotional needs and therapeutic goals. Unlike static tools, the ATI System™ evolves with the user, learning from every interaction to better understand their thought patterns, triggers, and responses.

The platform integrates evidence-based methods like CBT, dialectical behavior therapy (DBT), acceptance and commitment therapy (ACT), NLP, and performance coaching to address cognitive distortions as they arise. What sets Aidx.ai apart is its voice-enabled interaction, allowing users to engage in natural conversations via advanced speech recognition. This makes the platform accessible during daily routines, whether users are commuting, multitasking, or simply unable to type.

Aidx.ai also offers multi-platform integration, ensuring users can access support through web apps, progressive web apps (PWA), and even Telegram. This flexibility allows users to engage with the platform in the way that feels most comfortable for them.

To cater to different needs, Aidx.ai includes specialized modes. The Microcoaching mode offers quick, five-minute sessions for busy professionals who need immediate cognitive checks during their workday. Embodiment mode helps users visualize and physically connect with healthier thought patterns, while Incognito mode provides fully private sessions that auto-delete after 30 minutes – ideal for sensitive situations.

A comprehensive tracking system monitors emotional and performance metrics, giving users clear evidence of their progress. Goal-setting features and multi-channel reminders – via push notifications, messaging apps, and email – help users stay engaged and focused on their mental health journey.

Privacy and security are top priorities for Aidx.ai. With GDPR compliance, encrypted data storage, and no human oversight unless legally required, users can feel confident in sharing their thoughts without fear of exposure.

Beyond individual use, Aidx.ai extends its capabilities to professionals and organizations. Aidx for Practitioners allows therapists and coaches to manage client relationships, assign tasks, and track progress between sessions. Meanwhile, Aidx Corporate provides teams with confidential coaching access to build emotional resilience and reduce burnout, while offering anonymous insights into overall team well-being.

This integrated approach positions Aidx.ai as more than just a mental health tool – it becomes a trusted companion for personal growth and emotional resilience, seamlessly blending cognitive distortion detection into a broader support system.

Current Challenges and Future Developments

Building on the achievements and shortcomings of current applications, this section explores the challenges that remain and the advancements on the horizon for AI-driven cognitive distortion detection.

What Works and What Doesn’t

AI systems can quickly identify cognitive distortions, but their performance still has room for improvement. Current models achieve moderate accuracy, with one study reporting a 73% detection rate when analyzing personal blogs [15], and another showing 74% accuracy in predicting the likelihood of an individual benefiting from CBT [4].

However, these systems often falter when faced with more nuanced distortions. Large Language Models (LLMs) in particular tend to over-diagnose, flagging benign statements as distortions in certain contexts [6].

Data quality and representation also remain key issues. Research indicates that 42% of studies suffer from data deficiencies, while 24% highlight a lack of demographic diversity [16]. Additionally, the absence of a "human touch" limits the effectiveness of these tools. Nick Haber, Assistant Professor at the Stanford Graduate School of Education, underscores this limitation:

"LLM-based systems are being used as companions, confidants, and therapists, and some people see real benefits, but we find significant risks, and I think it’s important to lay out the more safety-critical aspects of therapy and to talk about some of these fundamental differences." [17]

Another challenge lies in the lack of transparency. Users and mental health professionals often find AI conclusions difficult to understand. As Vilaza and McCashin explain:

"Explicability in AI is the capacity to make processes and outcomes visible (transparent) and understandable." [16]

Bias is another persistent issue in AI models. Jared Moore, a PhD candidate in computer science at Stanford University, points out:

"Bigger models and newer models show as much stigma as older models. The default response from AI is often that these problems will go away with more data, but what we’re saying is that business as usual is not good enough." [17]

Addressing these challenges is critical for shaping the future of AI in this field.

Areas for Future Research

To tackle these issues, researchers are focusing on several key areas:

- Improving Dataset Diversity

Many studies emphasize the need for more diverse and balanced datasets. For example, Alhaj et al. propose using transformer-based topic modeling algorithms like BERTopic, paired with pre-trained language models such as AraBERT, to enrich short textual data in Arabic contexts [6]. Similarly, Ding et al. demonstrate how data augmentation and domain-specific models like MentalBERT can address data imbalance [6].

- Advancing Multimodal Integration

Combining text, audio, and visual data is a promising direction. Singh et al. developed a multitask framework that integrates these modalities, significantly improving cognitive distortion detection [6].

- Enhancing AI Interpretability

Future systems must incorporate explainable AI (XAI) techniques to make their decision-making processes clearer. This would help users and professionals understand not just what the AI detects, but also why it reaches specific conclusions [19].

- Developing Hybrid AI-Human Models

Instead of replacing human therapists, research is exploring ways for AI to assist therapists in real time. This collaborative approach could preserve the essential human connection while improving therapeutic outcomes [18].

- Personalized Therapy and Emotional Intelligence

Personalization is becoming a priority. As Anna Twomey suggests:

"Personalized Medicine 2.0: Going beyond genomics to include real-time biometrics for custom-tailored treatment plans – this catchphrase will be big." [20]

Integrating emotional intelligence into AI systems could also lead to more natural, supportive interactions by making the AI more responsive to users’ emotional states [18].

- Cross-Cultural Validation

Ensuring AI models can accurately interpret cognitive distortions across diverse populations is essential for broader applicability.

- Real-Time Intervention

Researchers are exploring how AI can offer immediate support during mental health crises, with the ability to escalate cases to human professionals when necessary [18].

Momentum in the field is evident. A 2022 survey revealed that 77% of organizations are prioritizing AI regulations as part of their policies, and 80% plan to invest in ethical AI development [7]. These efforts suggest that the future of AI in mental health care is both dynamic and filled with potential.

Privacy, Security, and Ethical AI Use

When AI systems delve into our thoughts and emotions, safeguarding sensitive information becomes a top priority. Mental health AI tools, in particular, handle deeply personal data and demand stricter protections than most other applications.

Data Protection Standards

Mental health data security is no small matter. In 2024, a major therapy platform faced a $7.8 million fine for sharing Protected Health Information (PHI) without proper HIPAA authorization [21]. Privacy concerns are widespread – 73% of users rank data privacy as a top priority, and many abandon mental health apps due to these worries [21] [23]. Alarmingly, 75% of mental health apps fail to meet privacy standards, with only 37% clearly disclosing their data collection practices [23]. On the flip side, apps with strong privacy measures see a 40% boost in user retention [23].

Privacy laws vary across regions. In the U.S., HIPAA governs health data protection, while the EU enforces the GDPR, and California adds further requirements under the CCPA. Often, companies must adopt a hybrid compliance strategy to meet these diverse regulations [21].

Technical safeguards are the backbone of any robust data protection strategy. Leading practices include encrypting data at rest using AES-256 and in transit with TLS 1.3 featuring Perfect Forward Secrecy. Adhering to data minimization principles – collecting only essential information and limiting retention periods – also strengthens security [21] [22].
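As one concrete piece of the "in transit" half, the sketch below configures a Python client to refuse anything older than TLS 1.3 using the standard-library `ssl` module. The helper name `make_tls13_client_context` is hypothetical. TLS 1.3 removed static RSA key exchange, so full handshakes rely on ephemeral keys, which is what provides forward secrecy. AES-256 encryption at rest is not shown because it requires a third-party cryptography library.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client-side context that only negotiates TLS 1.3 or newer."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse to fall back to TLS 1.2 or earlier.
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


context = make_tls13_client_context()
print(context.minimum_version.name)  # TLSv1_3
```

The context can then be passed to `urllib.request.urlopen(url, context=context)` or used to wrap a socket. Connections to servers that only support older protocol versions will fail rather than silently downgrade.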

One standout example comes from a meditation app that employs a dual-region approach. It stores PHI in a HIPAA-compliant AWS enclave in Virginia while processing non-PHI data in Dublin for EU users. This strategy not only addresses regional privacy concerns but also resulted in 40% faster growth among EU users [21].

Privacy-by-design principles should be baked into a platform’s architecture from the start. As one expert put it:

"The most successful mental health platforms will be those that build privacy and compliance into their core architecture from the beginning, rather than attempting to retrofit protections after development." [21]

User consent must also be clear and actionable. Dynamic consent tools, like toggle-based interfaces and geofenced modals, empower users to control how their data is shared based on local regulations [21].

But while technical safeguards are vital, ethical guidelines are equally important in shaping responsible AI development.

Ethical Considerations in AI Development

Ethical AI development requires addressing issues like bias, transparency, and human oversight. Transparency, in particular, is critical to building trust. The American Psychological Association (APA) highlights this in their guidance:

"Psychologists have an ethical obligation to obtain informed consent by clearly communicating the purpose, application, and potential benefits and risks of AI tools. Transparent communication maintains patient/client trust and upholds Principle E: Respect for People’s Rights and Dignity." [24]

Bias is another significant challenge. AI systems trained on limited or skewed datasets risk perpetuating disparities in mental health care. To counter this, developers must use diverse datasets and conduct regular algorithmic reviews to identify and correct biases [24] [26].

Human oversight remains a cornerstone of ethical AI use. AI is meant to support – not replace – professional judgment. The APA reinforces this:

"AI should augment, not replace, human decision-making. Psychologists remain responsible for final decisions and must not blindly rely on AI-generated recommendations." [24]

This is particularly crucial in areas like cognitive distortion detection, where AI can flag potential issues but human professionals must interpret these results in the broader context of an individual’s situation.

Mental health AI users often find themselves in vulnerable states, making them more susceptible to harm or manipulation. The EU AI Act explicitly bans systems that exploit user vulnerabilities to cause harm [25].

| Ethical Consideration | Key Requirements |
|---|---|
| Privacy and Confidentiality | Safeguard patient data to build trust [24] |
| Informed Consent | Clearly communicate the purpose, risks, and benefits of AI tools [24] |
| Bias and Fairness | Use diverse datasets and conduct bias assessments to address disparities [26] |
| Transparency and Accountability | Explain how algorithms work and ensure accountability for their outcomes [24] |
| Human Oversight | Ensure professionals guide final decisions [24] |
| Safety and Efficacy | Test rigorously to identify and mitigate potential risks [26] |

Involving stakeholders – like mental health professionals, patients, privacy advocates, and community representatives – early in the development process can help spot ethical concerns before they become problems [26].

Take Aidx.ai as an example. The platform incorporates privacy and ethical standards into its design, from GDPR compliance to encrypted data storage. It goes a step further by ensuring no human oversight is involved unless legally required. Its Adaptive Therapeutic Intelligence (ATI) System™ blends evidence-based techniques with strong user privacy controls.

To keep up with evolving technology and societal expectations, ongoing evaluations are essential. Regular audits, user feedback loops, and algorithm updates ensure that ethical standards remain a priority over time [26].

Conclusion: AI’s Future in Mental Health Support

Mental health care is undergoing a major shift, with AI-powered tools for detecting cognitive distortions paving the way. What started as basic pattern recognition has grown into advanced systems capable of analyzing speech, text, and behavior in real time. This evolution is reshaping how mental health care is delivered.

The numbers highlight the potential of AI in this space. Globally, depression is the leading cause of disability, yet only 27% of individuals receiving psychological therapy access standardized care [4][8]. AI offers a scalable solution to close this gap. For example, machine learning models can predict the effectiveness of Cognitive Behavioral Therapy (CBT) with about 74% accuracy, while narrative-enhanced AI approaches push this to 75%, compared to traditional methods at just 50% [27].

One of AI’s standout benefits is its ability to tailor treatments. By analyzing factors like genetic predispositions, past treatment outcomes, behavioral patterns, and even real-time physiological data, AI delivers a level of personalization that traditional approaches struggle to match [8]. It moves beyond the outdated one-size-fits-all model.

In practice, AI is already proving its value. Rather than replacing human expertise, it complements it, enhancing the care professionals provide.

Take Aidx.ai, for instance. Its Adaptive Therapeutic Intelligence (ATI) System™ combines evidence-based methods from CBT, DBT, ACT, and NLP, while maintaining strict privacy standards through GDPR compliance and encrypted data storage. With a voice-enabled interface and 24/7 availability, Aidx.ai offers real-time support that goes beyond the limitations of weekly therapy sessions.

Looking ahead, the possibilities are even more exciting. AI algorithms could refine CBT interventions based on a patient’s progress and unique cognitive patterns [8]. Wearable devices with AI capabilities might continuously monitor physiological and behavioral signals tied to mental health [8]. For these advancements to succeed, however, several challenges must be addressed: improving conversational AI, reducing bias by using diverse datasets, and ensuring transparency and accountability in AI systems.

The vision for the future is compelling: a world where cognitive distortions are detected and addressed seamlessly, where personalized mental health support is available to everyone, and where seeking help carries less stigma thanks to private, AI-driven solutions. AI doesn’t just extend the reach of mental health care – it amplifies the expertise of professionals, offering hope to millions who face barriers to care.

The transformation is already underway. The real question is no longer if AI will change mental health care, but how quickly and responsibly it can be scaled to make a difference for those who need it most.

FAQs

How does AI recognize cognitive distortions in real-time, and what role does Natural Language Processing (NLP) play?

AI can spot cognitive distortions in real-time by analyzing text for patterns that reveal irrational or biased thinking. These include things like overgeneralization, catastrophizing, or negative self-talk. Natural Language Processing (NLP) plays a key role here, as it allows AI to grasp the context, tone, and structure of language, picking up on subtle hints that suggest distorted thought patterns.

Using advanced NLP models, AI offers instant feedback to help individuals recognize unhelpful thinking habits. This guidance encourages a shift toward healthier and more constructive ways of thinking.

What ethical challenges does AI face in mental health care, and how are they being addressed?

AI’s role in mental health care comes with a set of ethical challenges. These include privacy risks, the possibility of data breaches, algorithmic bias, and concerns about reducing human interaction – an issue particularly relevant for younger individuals. There’s also the danger that AI tools might unintentionally promote harmful stereotypes or behaviors.

To tackle these challenges, developers and regulators prioritize transparency, informed consent, and strong privacy protections. Ethical guidelines stress that AI should support human decision-making, not replace it. By implementing strict regulations and maintaining ongoing oversight, efforts are being made to address bias, safeguard sensitive information, and uphold ethical standards in AI-powered mental health care.

How can AI tools like Aidx.ai enhance traditional therapy and benefit both users and therapists?

AI tools like Aidx.ai bring a fresh dimension to therapy by offering round-the-clock, real-time support, filling the gaps between sessions. These tools deliver personalized guidance, monitor progress, and reinforce therapeutic strategies, helping individuals stay aligned with their mental health objectives.

For therapists, these tools can simplify workflows by identifying patterns in a user’s thoughts and behaviors, offering insights that make treatment plans more targeted and effective. For individuals, they provide instant access to support, detect symptoms early, and suggest practical strategies – empowering users to actively manage their mental health while complementing the progress made in therapy sessions.